AI Policies Under the Microscope: How Aligned Are They with the Public?

Adapts to AI Advancements

High-impact policies are those that grow increasingly relevant as AI systems improve and exert greater influence on the economy and society. The most important regulations will address not only the problems associated with current AI but also the problems that may arise as AI becomes more powerful. They must treat AI as a transformative technology rather than merely another consumer product.

The public overwhelmingly supports this approach, favoring regulations that "prepare for more powerful models" over regulations that "only focus on models that exist today" (67-13). The public even prioritizes regulating these more dangerous models, preferring a focus on "More dangerous but unknown threats" over "Weaker but known threats" (46-22).

Discourages High-Risk AI Deployment

Imposing costs on the deployment of risky AI technologies can both temper the pace of capability growth and encourage the development of safer systems. AI companies should not be able to release dangerous models without bearing the costs when those models cause harm.

The public feels similarly, favoring regulations that make it "more difficult to release powerful AI" (66-14).

Reduces Proliferation of Dangerous AI

Effective safeguards must not only directly address misuse, such as the use of AI for bioterrorism, but also prevent malicious or reckless actors from circumventing those safeguards. It is therefore crucial that policies restrict the availability of base models that could otherwise easily be modified for misuse.

The public supports this principle even when confronted with relevant tradeoffs. They place much greater importance on "keeping AI out of the hands of bad actors" than on "providing benefits of AI to everyone" (65-22), and, more specifically, they clearly prefer not to allow powerful models to be open-sourced (47-23).

Affects Model Training

Potential AI dangers are not confined to deployment; they may also manifest during training. AI models may become dangerous before they are released to the public or become accessible to adversarial actors; cutting-edge AI labs are already evaluating their models for the ability to set up open-source copies on new servers. Legislation is therefore more effective if it also governs the training process.

The public also supports this approach, favoring regulating models when they are created, not just when they are used (65-12).

Restricts Capability Escalation

As AI capabilities increase at an unprecedented rate, regulations that explicitly limit those capabilities can mitigate potential risks. Rules that restrict models based on their capabilities, or on the amount of computational resources used to train them, may be especially beneficial.

The public shares this perspective, favoring explicit restrictions on how powerful models can become (67-14).

Addresses Current Vulnerabilities and Dangers

While the most extreme AI threats may be prospective, current dangers – such as propaganda and AI-assisted hacking – should not be overlooked.

Supporting this point, the public backs regulating current state-of-the-art models (66-14).

Prevents or Delays Superintelligent AI

Some researchers and organizations are actively striving to create AI systems whose general capabilities far surpass those of humans, and it is crucial to proceed with extreme caution in this domain. Policies that effectively prevent superintelligent AI would force development to proceed slowly and deliberately.

This principle strikes a chord with the public, who support actively preventing superintelligent AI as a goal of AI policy (63-16).

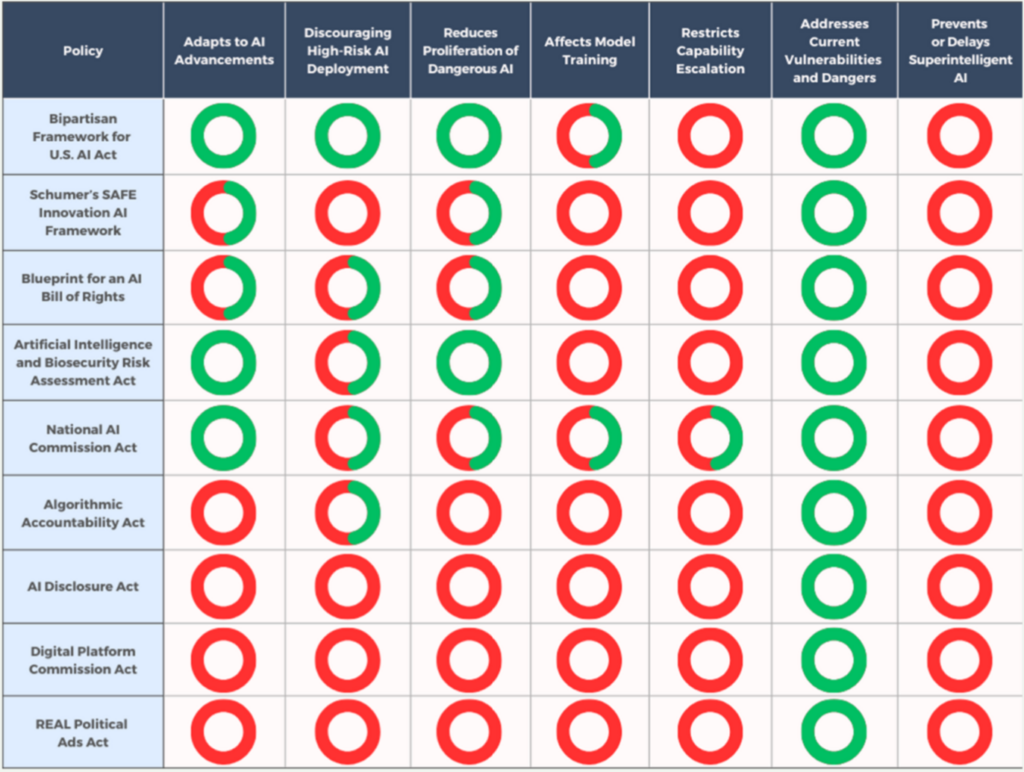

Policy Evaluation

We examine proposed AI policies against the attributes above and describe how each policy does or does not embody them.

In general, the policies proposed to date focus mainly on immediate harms. They predominantly frame AI as an ordinary consumer technology rather than a game-changing advancement.

Notably, a few policies do take steps to restrict the most hazardous capabilities of advanced AI systems. In particular, the Bipartisan Framework for U.S. AI Act includes a licensing and auditing regime that could limit risky deployment and proliferation, and the Artificial Intelligence and Biosecurity Risk Assessment Act addresses biological attacks.

None of the policies outlined below explicitly restricts capability escalation or superintelligence.

Below, we summarize individual legislative efforts and frameworks and evaluate how well they align with the publicly supported attributes described above.

Bipartisan Framework for U.S. AI Act

This framework calls for a licensing and auditing regime for powerful AI models, clarification of liability (incorporating the substance of the No Section 230 Immunity for AI Act), export controls to limit the transfer of advanced AI technology to China, and consumer-facing disclosures.

The licensing regime, administered by an independent oversight body, is particularly important because it treats AI as a transformative force. Licensing and public auditing of powerful models could make it more difficult for actors to release dangerous models and could reduce the proliferation of such models. However, it is unclear how much enforcement power this regime will retain in final legislation.

The framework also addresses liability, clarifying that Section 230 does not apply to AI. Stringent liability could hold companies accountable both for present-day failures, such as misinformation, and for catastrophic failures, such as enabling the development of bioweapons.

Schumer’s SAFE Innovation AI Framework

Senator Chuck Schumer’s proposed SAFE Innovation (Security, Accountability, Foundations, Explain, Innovation) AI framework is still at an early stage and could ultimately go in a number of different directions.

Currently, the framework focuses heavily on security against foreign adversaries but much less on misuse or negligent and reckless use within the United States. It also includes an accountability goal that covers immediate risks such as misinformation and bias. Security against adversaries is important, as is addressing immediate problems, but the framework would benefit greatly from giving more weight to domestic accidents and deliberate misuse, as well as to longer-term harms.

Blueprint for an AI Bill of Rights

While not a binding policy, the White House’s Blueprint serves as a guiding framework that outlines potential consumer harms, focusing mainly on current issues like discrimination and privacy. However, it does include a principle calling for safe systems, which, if developed further, could form the basis for future policies addressing more perilous AI applications.

Artificial Intelligence and Biosecurity Risk Assessment Act

This legislation instructs the Assistant Secretary for Preparedness and Response (ASPR) to conduct risk assessments of AI’s role in bioweapons development. The act thereby acknowledges the potentially catastrophic implications of AI, paving the way for future policy actions. It also explicitly addresses proliferation by treating open-source models as a threat vector. If the resulting recommendations are strong, the act could also affect capability escalation and deployment. However, it remains unclear how long the assessment and any subsequent policy development will take.

A separate act proposes establishing a commission to study AI risks and recommend an appropriate regulatory structure. While this act won’t produce immediate regulations, an effective commission could catalyze meaningful future legislation that focuses on catastrophic dangers, as well as on risk factors such as model proliferation.

Algorithmic Accountability Act

This bill mandates that companies using algorithms for crucial services conduct comprehensive impact assessments. These evaluations must confirm that the algorithms operate as intended, gauge potential adverse effects, and detail consumer rights protections, including privacy measures.

While the act imposes some costs by requiring assessments not mandated for human decision-making, its primary objective is to uncover inadvertently biased algorithms, and it is unlikely to have far-reaching implications for other undesirable model behavior. The focus remains on addressing immediate, rather than transformative, AI concerns.

A separate act, introduced by Representative Ritchie Torres, requires AI-generated content to disclose its artificial origin. While it could mitigate short-term hazards like propaganda, the act does not address the transformative nature of AI. Further, because the act does not address the proliferation of AI models, bad actors may be able to circumvent it by using models that do not comply.

Digital Platform Commission Act

Senators Michael Bennet and Peter Welch propose a commission to regulate digital platforms, including social media. While the act could curb harmful AI usage in consumer platforms, it doesn’t address AI’s potential as a transformative technology.

Separate legislation by Senators Amy Klobuchar, Cory Booker, and Michael Bennet targets AI-generated political ads, requiring them to disclose their artificial origins. Disclosure could reduce the effectiveness of such ads and thus discourage their use. However, the act is narrowly targeted at this one class of misuse, which is already possible today.